DeepSeek has released a new paper, with co-founder Liang Wenfeng credited as a contributor, detailing how its latest large language model, DeepSeek-V3, achieves efficient training and inference using only 2,048 H800 GPUs, significantly fewer than the tens of thousands typically required. The team attributes this efficiency to four key innovations: memory optimization through multi-head latent attention (MLA), computational savings via a Mixture-of-Experts (MoE) design with FP8 precision, communication improvements using a multi-plane network topology, and faster inference through multi-token prediction (MTP). With MLA, KV cache memory usage is cut to just 70KB per token, as little as 1/7 that of competing models. The MoE architecture activates only 37 billion of the model’s 671 billion parameters per forward pass, which the team says reduces training costs by 90% compared to dense models. FP8 training further halves compute and memory usage, with minimal accuracy tradeoff. Beyond the model, the paper also outlines five future directions for AI hardware design, advocating tighter integration between software and hardware to address memory, compute, and networking bottlenecks. [36Kr, in Chinese]
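To put the quoted figures in proportion, the short Python sketch below works through the arithmetic. It uses only the numbers reported above (37 billion active parameters out of 671 billion, and 70KB of KV cache per token). The 32,768-token context length, the decimal reading of "KB", and the helper names are assumptions made for illustration and do not come from the paper.

```python
# Back-of-the-envelope arithmetic on the figures quoted in the brief.
# Helper names and the example context length are illustrative assumptions.

TOTAL_PARAMS = 671e9            # total parameters, as reported
ACTIVE_PARAMS = 37e9            # parameters activated per forward pass, as reported
KV_BYTES_PER_TOKEN = 70 * 1000  # "70KB per token"; decimal kilobytes assumed


def active_fraction(active=ACTIVE_PARAMS, total=TOTAL_PARAMS):
    """Share of the model's parameters used in a single forward pass."""
    return active / total


def kv_cache_bytes(num_tokens, bytes_per_token=KV_BYTES_PER_TOKEN):
    """KV cache size for one sequence of num_tokens tokens."""
    return num_tokens * bytes_per_token


if __name__ == "__main__":
    print(f"Active share of parameters: {active_fraction():.1%}")

    context_len = 32_768  # hypothetical context length, not from the source
    gigabytes = kv_cache_bytes(context_len) / 1e9
    print(f"KV cache at {context_len} tokens: {gigabytes:.2f} GB")

    # The "1/7" comparison implies a baseline near 7 * 70KB per token.
    # This is derived from the quoted ratio, not a figure stated in the brief.
    implied_baseline_kb = 7 * KV_BYTES_PER_TOKEN / 1000
    print(f"Implied competitor KV cache: about {implied_baseline_kb:.0f}KB per token")
```

Under these assumptions, roughly one parameter in eighteen is active per forward pass, which is the basis of the compute savings attributed to the MoE design.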

Anaheim Honors Patti Hirahara with Proclamation

Anaheim Honors Patti Hirahara with Proclamation

JANM Announces In

JANM Announces In

Mobile Showers Address Basic Need for Homeless

Mobile Showers Address Basic Need for Homeless

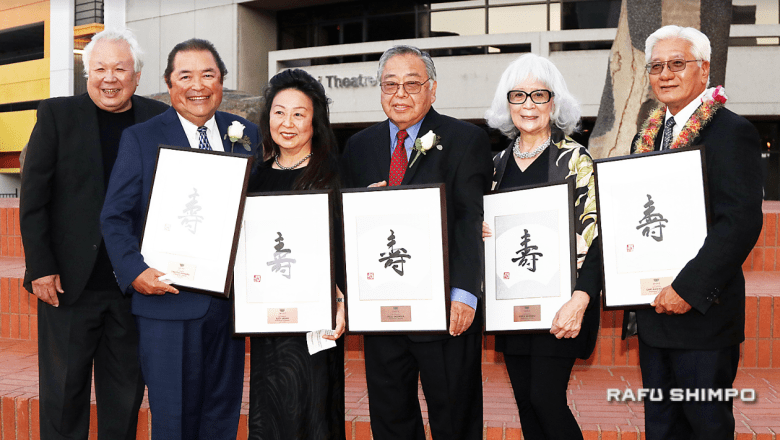

JACCC Celebrates 41st Anniversary with Kansha

JACCC Celebrates 41st Anniversary with Kansha

Man Gets Life Sentence for Kidnapping, Torture, Mutilation

Man Gets Life Sentence for Kidnapping, Torture, Mutilation

High Sloth NFTs Sold Out in 29 Minutes for $1.2 Million Dollars

High Sloth NFTs Sold Out in 29 Minutes for $1.2 Million Dollars

Funds Being Raised to Repair Damage at Shofuso

Funds Being Raised to Repair Damage at Shofuso

KSCA Adds Tea Ceremony to May 28 Bazaar

KSCA Adds Tea Ceremony to May 28 Bazaar

Torrance Optometrist Receives ‘Best in South Bay’ Award for 9th Year

Torrance Optometrist Receives ‘Best in South Bay’ Award for 9th Year

Salute for a 442nd Soldier

Salute for a 442nd Soldier

OBITUARY: Playwright Wakako Yamauchi, Remembered for ‘And the Soul Shall Dance’

OBITUARY: Playwright Wakako Yamauchi, Remembered for ‘And the Soul Shall Dance’

SFVHBT Obon Festival Set for June 24

SFVHBT Obon Festival Set for June 24

‘No No Girl’ Screening at ESGVJCC

‘No No Girl’ Screening at ESGVJCC

2023 Japanese Cultural Bazaar at Buddhist Temple of San Diego

2023 Japanese Cultural Bazaar at Buddhist Temple of San Diego

Challenger Astronaut Onizuka Remembered

Challenger Astronaut Onizuka Remembered

More Than Names on a Wall

More Than Names on a Wall

Honor on Parade

Honor on Parade

IT PAYS TO KNOW: Hiring In

IT PAYS TO KNOW: Hiring In

OBITUARY: Mas Fujimoto, 92; Longtime Koyasan Troop 379 Scout Leader

OBITUARY: Mas Fujimoto, 92; Longtime Koyasan Troop 379 Scout Leader

Top Value and Huobi Launch a Blockchain Mining Fund

Top Value and Huobi Launch a Blockchain Mining Fund

BinaryX Launches Strategy Game CyberChess With $500,000 Prize PoolVega Squadron qualify after beating LiquidMedia and Consultancy Startup PANONY Valued at $100MWest L.A. UMC Hosts Asian Cultural BazaarKulfi Finance Introduces Fixed Rate Lending and Borrowing Protocol on CardanoScreening of ‘The Motel’ at JANMBlockchain Economy Dubai Summit Is Just Around the CornerMintology Announces the Launch of New Brand Centric Claimable NFT PlatformSuper Gremlin announces Metaverse expansion by acquiring a prime location in DecentralandCopium Protocol NFT Pre Mint Goes Live The Year History Died EPA will now classify wood burning as carbon neutral, except it isn't Best smartwatch deal: Save $40 on the Fitbit Versa 4 New ExoMars photo shows Mars bathed in dramatic light Cows could be the largest mammals left on Earth in 300 years Astronomers are out looking for long Scott Pruitt proposes EPA limit agency's use of scientific studies No Re-Turning Point, U.S.A. Watch SpaceX send NASA's alien Best Fire TV Spring Sale deal: Save $150 on the Insignia 70
